Working as a cloud engineer across multiple teams, I've seen the same pattern repeat itself: infrastructure costs slowly creep up, and by the time anyone notices, the AWS bill has doubled.

It's never one dramatic incident. It's the accumulation of dozens of reasonable decisions that compound over time.

- Week 1: Add an RDS instance for the new feature.

- Week 3: Scale up an EC2 instance for "temporary" testing.

- Week 5: Add an ElastiCache cluster to improve performance.

- Month 3: The AWS bill has doubled. But which changes caused it?

The Insight Dilution Problem

Each infrastructure change makes perfect sense in isolation. A developer adds what they need, the code gets reviewed for bugs and security issues, and it ships to production.

But here's what happens to cost visibility:

When you make 50+ infrastructure changes over three months, the connection between individual decisions and the overall bill becomes invisible. Sure, you can see the total went up. But which specific changes were expensive? Which architectures are inefficient? Where should you optimize?

This is what I call the insight dilution problem: the more changes you make, the harder it becomes to understand the cost impact of any single decision.

By the time you're looking at the AWS bill, you're trying to untangle three months of compounded architectural decisions. It's like debugging production code without logs.

The Production Trap

Once you discover a cost problem, you're stuck in what I call the production trap.

You need to make changes to reduce costs. But these services are already running in production. They have dependencies. Customers rely on them. Changing them means:

- Stress: What if reducing instance size causes performance issues?

- Time: Change management, approvals, testing, gradual rollouts

- Opportunity cost: Engineers spending weeks optimizing instead of building

The further along the delivery pipeline a resource has traveled, the harder it is to change.

Time to Change vs Pipeline Stage

Production ████████████████ (Weeks, high stress)

Staging ████████ (Days, medium effort)

CI/CD ████ (Hours, some coordination)

Code Review ██ (Minutes, easy changes)

Local Dev █ (Seconds, immediate)

When cost problems surface in production, you're operating in the most expensive, slowest, highest-stress part of this curve.

The FinOps Consultant Band-Aid

At one client, we hired FinOps consultants to help clean up our AWS costs. They were excellent at their job. They analyzed our infrastructure, identified waste, and recommended changes.

But here's what struck me: we were treating the symptom, not the cause.

The consultants would recommend downsizing an RDS instance or removing unused resources. Then we'd spend weeks implementing those changes in production. Meanwhile, developers continued making new infrastructure decisions without cost visibility, creating tomorrow's optimization work.

It was a reactive cycle:

- Developers build infrastructure without cost feedback

- Costs accumulate in production

- FinOps team analyzes and recommends fixes

- Engineering team spends weeks making production changes

- Repeat

We were cleaning up messes after they'd already materialized in production, when they were most expensive and time-consuming to fix.

The insight: What if we prevented the mess in the first place?

We Already Shift Left for Everything Else

This realization made me notice a pattern: we've successfully "shifted left" for almost every other engineering concern.

Security: We run Checkov in CI/CD to catch security issues before deployment. We don't wait for penetration testers to find problems in production.

Best practices: We use cdk-nag to validate CDK constructs during development. We don't wait for architecture reviews to catch issues.

Code quality: We use linters, type checkers, and unit tests in our IDEs. We don't wait for QA to find bugs.

Compliance: We use policy-as-code to validate configurations in CI/CD. We don't wait for audits to find violations.

For all of these, we learned that catching issues early is:

- Faster: Seconds to minutes instead of days to weeks

- Cheaper: No production impact, no rollback costs

- Less stressful: Change a line of code vs coordinate a production change

- Educational: Developers learn from immediate feedback

So why not cost?

The Missing Feedback Loop

Developers today make infrastructure decisions in a vacuum. It's like coding without a compiler - you don't find out if it works until runtime.

You write Terraform or CDK code that provisions an m5.24xlarge instance. The code looks fine. It passes review. It deploys successfully. Then three weeks later, someone notices that the instance costs roughly $3,400/month (on-demand Linux in us-east-1).

The same feedback gap exists for security infrastructure. Enable CloudTrail data events in your IaC code, and three weeks later you discover it's costing $600/month at $0.10 per 100,000 events - a cost you could have caught during code review.
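The CloudTrail figure is simple arithmetic once you know the event volume. This is a minimal sketch that assumes the $0.10 per 100,000 data events rate quoted above. The workload is hypothetical: a bucket averaging about 230 object-level API calls per second over a 30-day month.

```typescript
// CloudTrail data events rate quoted in the text: $0.10 per 100,000 events.
const PRICE_USD_PER_UNIT = 0.1;
const EVENTS_PER_UNIT = 100000;

// Monthly cost of a given number of data events.
function dataEventCost(eventsPerMonth: number): number {
  return (eventsPerMonth / EVENTS_PER_UNIT) * PRICE_USD_PER_UNIT;
}

// The reverse: how many events a given monthly bill implies.
function impliedEvents(monthlyUsd: number): number {
  return (monthlyUsd / PRICE_USD_PER_UNIT) * EVENTS_PER_UNIT;
}

// Hypothetical workload: ~230 object-level S3 API calls per second, 30-day month.
const SECONDS_PER_MONTH = 60 * 60 * 24 * 30;
const monthlyEvents = 230 * SECONDS_PER_MONTH;

console.log(`${monthlyEvents} events -> $${dataEventCost(monthlyEvents).toFixed(2)}/month`);
console.log(`$600/month implies ${impliedEvents(600)} events`);
```

This comes to roughly 596 million events and about $596 a month. Working backward, a $600 bill implies 600 million events. A number like that is easy to question in a pull request and hard to notice in a trail configuration.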

The developer had no way to know. They made a reasonable choice with the information available. And by the time anyone noticed, changing it means navigating the production trap.

The feedback loop is broken. The gap between the decision (instance size in code) and the feedback (cost on AWS bill) is weeks or months. During that time, context is lost, the architecture solidifies, and changing becomes exponentially harder.

Compare this to security scanning:

- Developer commits code with hardcoded secret

- Checkov flags it in CI/CD immediately

- Developer removes secret, commits again

- Total time: 2 minutes

Imagine if security worked like cost:

- Developer commits hardcoded secret

- Code deploys to production

- Three weeks later, security team notices during audit

- Now you need incident response, key rotation, log analysis

- Total time: days or weeks

We'd never accept that for security. Why do we accept it for cost?

The Vision: Cost Visibility at Code Review Time

Here's what the ideal workflow looks like:

- Developer writes infrastructure code: `new ec2.Instance(this, 'AppServer', { vpc, machineImage, instanceType: new ec2.InstanceType('t3.large') })`

- Developer opens a pull request

- Automated comment appears: "This change adds $61/month to your AWS bill"

- Team discusses: "Do we need t3.large? Let's test with t3.medium first"

- Developer adjusts: `instanceType: new ec2.InstanceType('t3.medium')`

- Comment updates: "This change adds $30/month"

- Team approves, PR merges

These EC2 estimates cover compute only. EBS volumes, data transfer, and other costs that the instance type doesn't reveal can add another 30-50% on top. Security services carry similar hidden costs: enabling CloudTrail Lake might show $768/month for ingestion, but every query adds $0.005 per GB scanned. AI services follow the same pattern. On Amazon Bedrock, Knowledge Bases, Agents, and Guardrails can all add costs on top of per-token model charges.
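To make the compute-only caveat concrete, here is a minimal sketch of a line-item estimate for the t3.large from the walkthrough. The rates are assumed us-east-1 list prices at the time of writing: on-demand Linux compute, gp3 storage at $0.08/GB-month, and internet egress at $0.09/GB, ignoring the monthly free allowance. The 100 GB volume and 150 GB of egress are hypothetical workload numbers.

```typescript
type Kind = "compute" | "storage" | "network";

interface LineItem {
  label: string;
  kind: Kind;
  monthlyUsd: number;
}

// Assumed us-east-1 list prices, for illustration only; check current pricing.
const T3_LARGE_HOURLY = 0.0832; // on-demand Linux, USD per hour
const GP3_PER_GB_MONTH = 0.08; // gp3 storage, USD per GB-month
const EGRESS_PER_GB = 0.09; // internet egress, first paid tier, USD per GB
const HOURS_PER_MONTH = 730;

// Hypothetical estimate: one instance, one gp3 volume, some internet egress.
function estimate(ebsGb: number, egressGb: number): LineItem[] {
  return [
    { label: "EC2 t3.large", kind: "compute", monthlyUsd: T3_LARGE_HOURLY * HOURS_PER_MONTH },
    { label: `EBS gp3, ${ebsGb} GB`, kind: "storage", monthlyUsd: GP3_PER_GB_MONTH * ebsGb },
    { label: `Data transfer out, ${egressGb} GB`, kind: "network", monthlyUsd: EGRESS_PER_GB * egressGb },
  ];
}

function sumUsd(items: LineItem[]): number {
  return items.reduce((sum, item) => sum + item.monthlyUsd, 0);
}

// How much the non-compute items add on top of a compute-only estimate.
function overheadOverCompute(items: LineItem[]): number {
  const compute = sumUsd(items.filter((i) => i.kind === "compute"));
  const other = sumUsd(items.filter((i) => i.kind !== "compute"));
  return other / compute;
}

const items = estimate(100, 150);
console.log(`total $${sumUsd(items).toFixed(2)}, +${(overheadOverCompute(items) * 100).toFixed(0)}% over compute`);
```

With these inputs, the total comes to about $82.24 a month. The storage and transfer lines add roughly 35% on top of the compute line, which falls inside the 30-50% range. A different workload will land somewhere else, and that is the reason to estimate every line item rather than only the instance.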

Notice what happened:

- Decision point: The conversation happened during code review, when change is easiest

- Context: Everyone remembers why this instance exists and what it needs

- Learning: Developer now knows t3.large ≈ $60/month, t3.medium ≈ $30/month

- Prevention: No production optimization needed later
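The figures in this walkthrough come from a simple conversion: the hourly on-demand rate multiplied by about 730 hours per month (8,760 hours a year divided by 12, the convention the AWS Pricing Calculator uses). This is a minimal sketch that assumes us-east-1 on-demand Linux rates at the time of writing. The rates are hardcoded here, where a real tool would look them up.

```typescript
// Assumed on-demand Linux hourly rates (USD, us-east-1) at time of writing;
// a real tool should fetch these from the AWS Pricing API instead.
const HOURLY_USD: Record<string, number> = {
  "t3.medium": 0.0416,
  "t3.large": 0.0832,
  "m5.xlarge": 0.192,
  "m5.24xlarge": 4.608,
};

// AWS estimates commonly assume 730 hours per month (8,760 h / 12).
const HOURS_PER_MONTH = 730;

function monthlyCost(instanceType: string): number {
  const hourly = HOURLY_USD[instanceType];
  if (hourly === undefined) {
    throw new Error(`No price for ${instanceType}`);
  }
  return hourly * HOURS_PER_MONTH;
}

// Monthly change from swapping one instance type for another (negative = savings).
function swapDelta(from: string, to: string): number {
  return monthlyCost(to) - monthlyCost(from);
}

for (const type of Object.keys(HOURLY_USD)) {
  console.log(type, monthlyCost(type).toFixed(2));
}
console.log("t3.large -> t3.medium:", swapDelta("t3.large", "t3.medium").toFixed(2));
```

This gives roughly $30.37 for t3.medium, $60.74 for t3.large, $140.16 for m5.xlarge, and $3,363.84 for m5.24xlarge. Swapping t3.large for t3.medium saves about $30.37 a month.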

This is shift-left for FinOps: bringing cost feedback into the development workflow, where architectural decisions are still flexible.

Developer Ownership Through Information

Here's what I learned: developers want to make cost-efficient decisions. They just don't have the information.

When you tell a developer "that instance is costing us $8k/month in production," they feel bad. They made an uninformed decision that turned out expensive, and now fixing it is complicated.

But when you show them "t3.large = $60/month, m5.xlarge = $140/month" during code review (using CDK cost estimation tools), they make informed decisions. They might say:

- "Let's start with t3.large and scale if needed"

- "Actually, we only need this during business hours"

- "Can we use Lambda instead of a dedicated instance?"

Cost visibility doesn't restrict developers. It empowers them.

Instead of FinOps as a gatekeeping function ("you can't use that, it's too expensive"), it becomes an information function ("here's what things cost, make informed decisions").

The team owns the cost decisions. They see the tradeoffs. They learn AWS pricing through daily exposure. Cost efficiency becomes a natural part of code review, not a separate optimization phase.

Introducing CloudBurn

This is why I built CloudBurn: to shift FinOps left into the code review process.

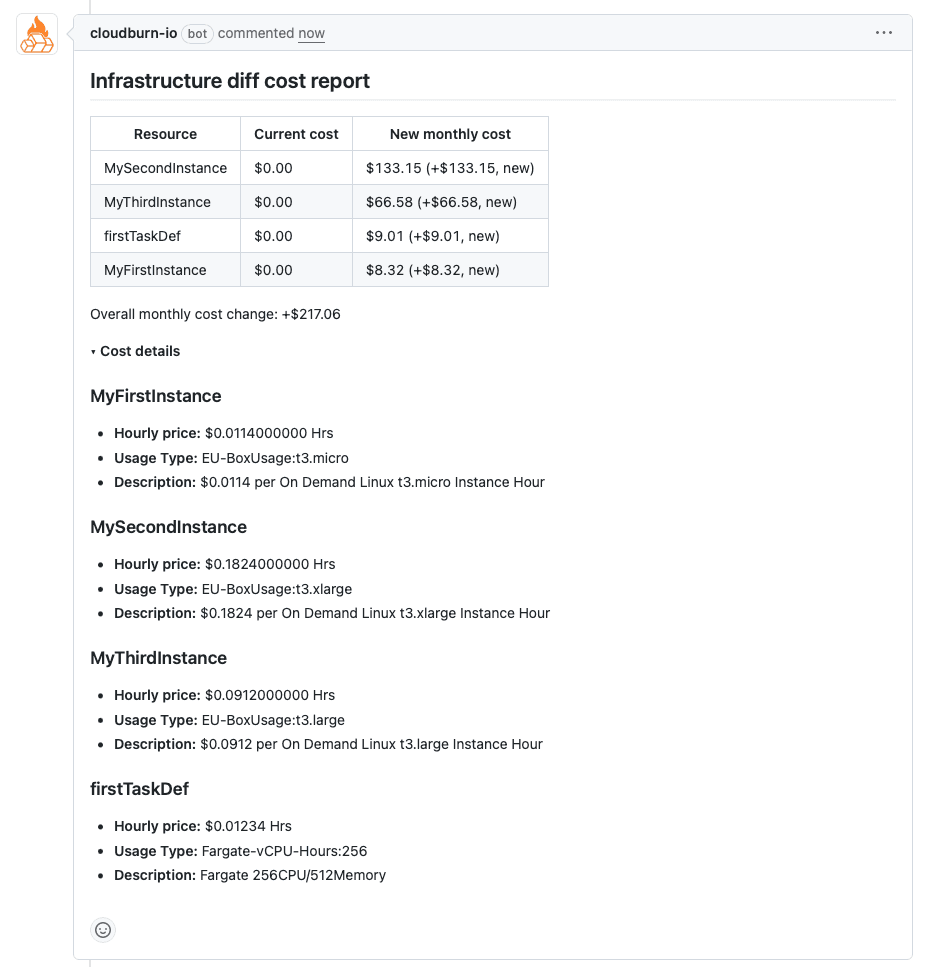

CloudBurn is a GitHub App that automatically analyzes infrastructure changes in pull requests and posts cost impact comments. It works with both AWS CDK and Terraform.

When someone opens a PR with infrastructure changes, CloudBurn:

- Extracts the resources being added, modified, or removed

- Determines the properties (instance types, storage sizes, etc.)

- Queries the AWS Pricing API for current list prices

- Posts a comment showing the cost impact
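The article doesn't describe how CloudBurn implements these steps. For CDK, though, the first two can be sketched by diffing the CloudFormation templates that `cdk synth` produces for the base branch and for the PR branch. The sketch below is hypothetical and is not CloudBurn's code. It compares the `Resources` maps by logical ID to classify each resource as added, modified, or removed, then reads `InstanceType` for EC2 instances. A Terraform version could read the JSON output of `terraform show -json` for a saved plan instead.

```typescript
// Minimal shape of a synthesized CloudFormation template (`cdk synth` output).
interface CfnResource {
  Type: string;
  Properties?: Record<string, unknown>;
}

interface CfnTemplate {
  Resources?: Record<string, CfnResource>;
}

type ChangeKind = "added" | "modified" | "removed";

interface ResourceChange {
  logicalId: string;
  type: string;
  kind: ChangeKind;
  before?: Record<string, unknown>;
  after?: Record<string, unknown>;
}

// Structural equality for JSON-like values (key order does not matter).
function deepEqual(a: unknown, b: unknown): boolean {
  if (a === b) return true;
  if (typeof a !== "object" || typeof b !== "object" || a === null || b === null) return false;
  if (Array.isArray(a) !== Array.isArray(b)) return false;
  const ra = a as Record<string, unknown>;
  const rb = b as Record<string, unknown>;
  const keysA = Object.keys(ra);
  if (keysA.length !== Object.keys(rb).length) return false;
  return keysA.every((k) => Object.prototype.hasOwnProperty.call(rb, k) && deepEqual(ra[k], rb[k]));
}

// Step 1 (sketch): classify resources as added, modified, or removed by logical ID.
function diffTemplates(before: CfnTemplate, after: CfnTemplate): ResourceChange[] {
  const oldRes = before.Resources || {};
  const newRes = after.Resources || {};
  const changes: ResourceChange[] = [];
  for (const id of Object.keys(newRes)) {
    const next = newRes[id];
    const prev = Object.prototype.hasOwnProperty.call(oldRes, id) ? oldRes[id] : undefined;
    if (prev === undefined) {
      changes.push({ logicalId: id, type: next.Type, kind: "added", after: next.Properties });
    } else if (prev.Type !== next.Type || !deepEqual(prev.Properties || {}, next.Properties || {})) {
      changes.push({ logicalId: id, type: next.Type, kind: "modified", before: prev.Properties, after: next.Properties });
    }
  }
  for (const id of Object.keys(oldRes)) {
    if (!Object.prototype.hasOwnProperty.call(newRes, id)) {
      changes.push({ logicalId: id, type: oldRes[id].Type, kind: "removed", before: oldRes[id].Properties });
    }
  }
  return changes;
}

// Step 2 (sketch): read the property that drives price for an EC2 instance.
function instanceTypeOf(props?: Record<string, unknown>): string | undefined {
  const value = props ? props["InstanceType"] : undefined;
  return typeof value === "string" ? value : undefined;
}

// Hypothetical base-branch and PR-branch templates.
const base: CfnTemplate = {
  Resources: {
    AppServer: { Type: "AWS::EC2::Instance", Properties: { InstanceType: "t3.large", ImageId: "ami-placeholder" } },
    OldQueue: { Type: "AWS::SQS::Queue" },
  },
};
const pr: CfnTemplate = {
  Resources: {
    AppServer: { Type: "AWS::EC2::Instance", Properties: { InstanceType: "t3.medium", ImageId: "ami-placeholder" } },
    Cache: { Type: "AWS::ElastiCache::CacheCluster", Properties: { CacheNodeType: "cache.t3.micro", Engine: "redis", NumCacheNodes: 1 } },
  },
};

for (const c of diffTemplates(base, pr)) {
  console.log(c.kind, c.logicalId, c.type, instanceTypeOf(c.before), "->", instanceTypeOf(c.after));
}
```

On the sample templates, the sketch reports `AppServer` as modified (t3.large to t3.medium), `Cache` as added, and `OldQueue` as removed. Comparing by logical ID is a deliberate simplification. CDK changes a resource's logical ID when you rename or move its construct, and this sketch would report that as a removal plus an addition.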

The comment shows:

- Old monthly cost

- New monthly cost

- Delta (what this PR adds or saves)

- Breakdown by resource
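Once each changed resource has an old and a new monthly estimate, producing the comment contents listed above takes only a sum and a subtraction. This is a minimal, hypothetical rendering sketch. The layout is an assumption, not CloudBurn's actual comment format. It uses rounded t3.large and t3.medium on-demand figures ($60.74 and $30.37 a month) and an assumed $12.41 a month for a cache.t3.micro ElastiCache node.

```typescript
// Per-resource monthly estimate before and after the PR (0 = resource absent).
interface ResourceCost {
  logicalId: string;
  oldMonthlyUsd: number;
  newMonthlyUsd: number;
}

interface CostSummary {
  oldTotal: number;
  newTotal: number;
  delta: number;
}

function summarize(rows: ResourceCost[]): CostSummary {
  const oldTotal = rows.reduce((s, r) => s + r.oldMonthlyUsd, 0);
  const newTotal = rows.reduce((s, r) => s + r.newMonthlyUsd, 0);
  return { oldTotal, newTotal, delta: newTotal - oldTotal };
}

// Format a delta as "+$12.34" or "-$12.34".
function signedUsd(value: number): string {
  const sign = value < 0 ? "-" : "+";
  return `${sign}$${Math.abs(value).toFixed(2)}`;
}

// Render a markdown PR comment: totals first, then a per-resource breakdown.
function renderComment(rows: ResourceCost[]): string {
  const s = summarize(rows);
  const lines = [
    `**Monthly cost:** $${s.oldTotal.toFixed(2)} → $${s.newTotal.toFixed(2)} (${signedUsd(s.delta)})`,
    "",
    "| Resource | Old | New | Delta |",
    "| --- | ---: | ---: | ---: |",
  ];
  for (const r of rows) {
    lines.push(`| ${r.logicalId} | $${r.oldMonthlyUsd.toFixed(2)} | $${r.newMonthlyUsd.toFixed(2)} | ${signedUsd(r.newMonthlyUsd - r.oldMonthlyUsd)} |`);
  }
  return lines.join("\n");
}

// Hypothetical estimates for the PR from the earlier walkthrough plus a new cache node.
const rows: ResourceCost[] = [
  { logicalId: "AppServer", oldMonthlyUsd: 60.74, newMonthlyUsd: 30.37 },
  { logicalId: "Cache", oldMonthlyUsd: 0, newMonthlyUsd: 12.41 },
];

console.log(renderComment(rows));
```

A resource that is absent on one side simply carries a 0, so additions and removals need no special handling.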

No extra steps for developers. No dashboards to check. No manual cost calculations. Just automatic visibility during the natural review process.

The result: Cost discussions happen during code review, when change is easy. Problems get prevented instead of fixed later in production.

Why This Matters Now

For many tech companies, cloud spend is now one of the largest costs after headcount. Every team is making infrastructure decisions daily through Terraform, CDK, Pulumi, and other IaC tools.

The gap between those decisions and their financial impact creates a disconnect:

- Developers optimize for features and performance

- Finance teams react to unexpected bills

- FinOps consultants clean up afterward

- The cycle repeats

Shifting left breaks this cycle. When developers see cost impact during code review:

- Better architectural decisions from the start

- No surprise bills

- No expensive production optimization

- No consultant cleanup needed

The savings also recur. Every $100/month prevented is $1,200/year saved for as long as that resource would have run, so small decisions add up to large savings.

The Shift-Left Principle

The pattern is clear across every discipline:

- Security: shift left from pentesting → Checkov. Result: fewer vulnerabilities reach production.

- Best practices: shift left from architecture review → cdk-nag. Result: faster feedback, fewer rework cycles.

- Cost: shift left from FinOps cleanup → CloudBurn. Result: prevention instead of cleanup, and developer ownership.

The principle is simple: catch issues when they're easy to fix, not when they're expensive to fix.

For cost, that moment is code review. Not production. Not staging. Not even CI/CD. Code review is the sweet spot where:

- Architectural decisions are still flexible

- Team context is fresh

- Change takes seconds, not weeks

- Discussion is natural and collaborative

Start Preventing, Stop Fixing

If your team builds infrastructure on AWS using CDK or Terraform, you're making cost decisions daily whether you realize it or not.

The question is: do you want to discover those decisions' impact during code review, when change is a one-line edit?

Or in production three months later, when change requires weeks of coordination?

The cloud is too expensive to optimize reactively.

It's time to shift FinOps left - where it belongs, alongside security, quality, and compliance. Give your developers cost visibility during code review. Turn prevention into a workflow, not an afterthought.

The consultants can focus on strategy instead of cleanup. Your developers will make better decisions with better information. And your AWS bill will reflect intentional choices, not accumulated accidents.